Automating Mastodon Posts with OpenAI's ChatGPT

Introduction

Mastodon is a decentralized social media platform that allows users to communicate and share content with others in a community-based manner. OpenAI’s ChatGPT models, such as gpt-3.5-turbo, are powerful language models that can generate human-like responses to prompts through the OpenAI API. In this blog post, we’ll explore how to combine the two to automatically post interesting facts on Mastodon.

Prerequisites

- Python 3.10+ and Pipenv installed
- A Mastodon access token (create one under Development > Your applications > New application > Your access token)
- An OpenAI API key

Dependencies

We start by creating a new Python project with the following Pipfile:

```toml
[[source]]
url = "https://pypi.org/simple"
verify_ssl = true
name = "pypi"

[packages]
"Mastodon.py" = "==1.8.0"
openai = "==0.27.0"
python-dotenv = "*"

[dev-packages]

[requires]
python_version = "3.10"
```

We install the dependencies with `pipenv install`. We’ll use the Mastodon.py library to interact with the Mastodon API, and the OpenAI library to interact with the OpenAI API. The openai pin matters: `openai.ChatCompletion`, which the code below uses, was removed in version 1.0 of the library.

We’ll also use python-dotenv to load environment variables from a .env file. We create the file and add the following variables:

```
MASTODON_INSTANCE="YOUR_MASTODON_INSTANCE" # e.g. https://mastodon.social/
MASTODON_BOT_TOKEN="YOUR_MASTODON_BOT_TOKEN"
OPENAI_API_KEY="YOUR_OPENAI_API_KEY"
POST_INTERVAL_SECONDS=3600 # 1h
```

Code

We start by reading the environment variables from the .env file created before, using the load_dotenv() function from the python-dotenv library. This makes it easy to set configuration values in a separate file without hardcoding them in the script.

```python
from dotenv import load_dotenv

load_dotenv()
```

Next, we import the Mastodon library and set up a Mastodon client with the access token:

```python
import os
from mastodon import Mastodon

# Set up the Mastodon client
mastodon = Mastodon(
    access_token=os.environ['MASTODON_BOT_TOKEN'],
    api_base_url=os.environ['MASTODON_INSTANCE'],
)
```

Then we import the OpenAI library and set the API key. We also define the prompt sent to the ChatGPT model on every run, and a system message instructing it to reply with only a random interesting fact:

```python
import openai

# Set up OpenAI
openai.api_key = os.environ['OPENAI_API_KEY']
prompt = "Tell me a random fact, be it fun, lesser-known or just interesting. Before answering, always " \
         "check your previous answers to make sure you haven't answered with the same fact before, " \
         "even in different form."
history = [{
    "role": "system",
    "content": "You are a helpful assistant. When asked about a random fun, lesser-known or interesting fact, "
               "you only reply with the fact and nothing else."
}]
```

Finally, we create a loop that runs forever and posts a new fact every hour by default:

```python
import logging
import time

while True:
    try:
        history.append({"role": "user", "content": prompt})

        # Keep the system message plus the 9 most recent messages
        # to avoid excessive token usage
        if len(history) > 10:
            history = history[:1] + history[-9:]

        # Generate a random fact
        response = openai.ChatCompletion.create(
            model='gpt-3.5-turbo',
            messages=history,
            temperature=1.3,
        )
        fact = response.choices[0]['message']['content']

        # Remember the answer so the model can avoid repeating it
        history.append({"role": "assistant", "content": fact})

        # Post the fact on Mastodon
        mastodon.status_post(status=fact)

    except Exception as e:
        logging.error(e)

    # Wait for the specified interval before posting again
    time.sleep(float(os.environ.get('POST_INTERVAL_SECONDS', 3600)))
```

The while loop runs indefinitely, posting a new status to Mastodon every POST_INTERVAL_SECONDS seconds. This value defaults to 3600 seconds (1 hour) if it is not set.

Each iteration appends the prompt to the history list and, once the list grows past 10 messages, drops the oldest messages while keeping the system message, which limits the token usage for the OpenAI API. It then calls the openai.ChatCompletion.create() method to generate a random fact and appends the reply to the history, so the model can see its previous answers when asked for the next fact.
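One way to test the history bookkeeping without calling either API is to move it into plain functions. The sketch below is our own, not code from the bot: `trim_history` and `record_turn` are hypothetical helper names. It assumes the first message is the system prompt and keeps it when trimming, and it records the assistant’s reply alongside each prompt so the model has its earlier facts in context.

```python
from typing import Dict, List

Message = Dict[str, str]


def trim_history(history: List[Message], max_messages: int = 10) -> List[Message]:
    """Return at most max_messages messages, keeping a leading system message."""
    if max_messages < 1:
        raise ValueError("max_messages must be at least 1")
    if len(history) <= max_messages:
        return list(history)
    if history[0]["role"] == "system":
        # Keep the system prompt plus the newest (max_messages - 1) messages
        keep = max_messages - 1
        return [history[0]] + history[len(history) - keep:]
    # No system prompt: just keep the newest messages
    return history[len(history) - max_messages:]


def record_turn(history: List[Message], prompt: str, reply: str,
                max_messages: int = 10) -> List[Message]:
    """Append one user prompt and the assistant's reply, then trim."""
    updated = history + [
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": reply},
    ]
    return trim_history(updated, max_messages)


# Simulate eight posts without touching the network
history = [{"role": "system", "content": "You only reply with the fact."}]
for i in range(8):
    history = record_turn(history, "Tell me a fact.", f"Fact {i}")
print(len(history), history[0]["role"], history[-1]["content"])
# → 10 system Fact 7
```

Pinning the system message means the instructions survive however long the bot runs, while the 10-message cap still bounds the number of tokens sent per request.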

The temperature argument is a number between 0 and 2 that defaults to 1. Higher values make the output more random, while lower values make it more focused and deterministic. Here we set it to 1.3 to get more varied facts.
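To build intuition for what temperature does, here is a toy illustration of temperature-scaled softmax, the standard way sampling temperature is described for language models. OpenAI does not document its exact sampling implementation, so treat this as a conceptual sketch: the logits are made-up numbers and `softmax_with_temperature` is a hypothetical helper.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities, dividing by temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens
logits = [2.0, 1.0, 0.1]
for t in (0.5, 1.0, 1.3):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At low temperatures the top-scoring token dominates; as the temperature rises, probability mass spreads to less likely tokens, which is why higher values produce more varied but less predictable text.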

Conclusion

In this blog post, we explored how to combine Mastodon and OpenAI’s ChatGPT to automatically post interesting facts on Mastodon.

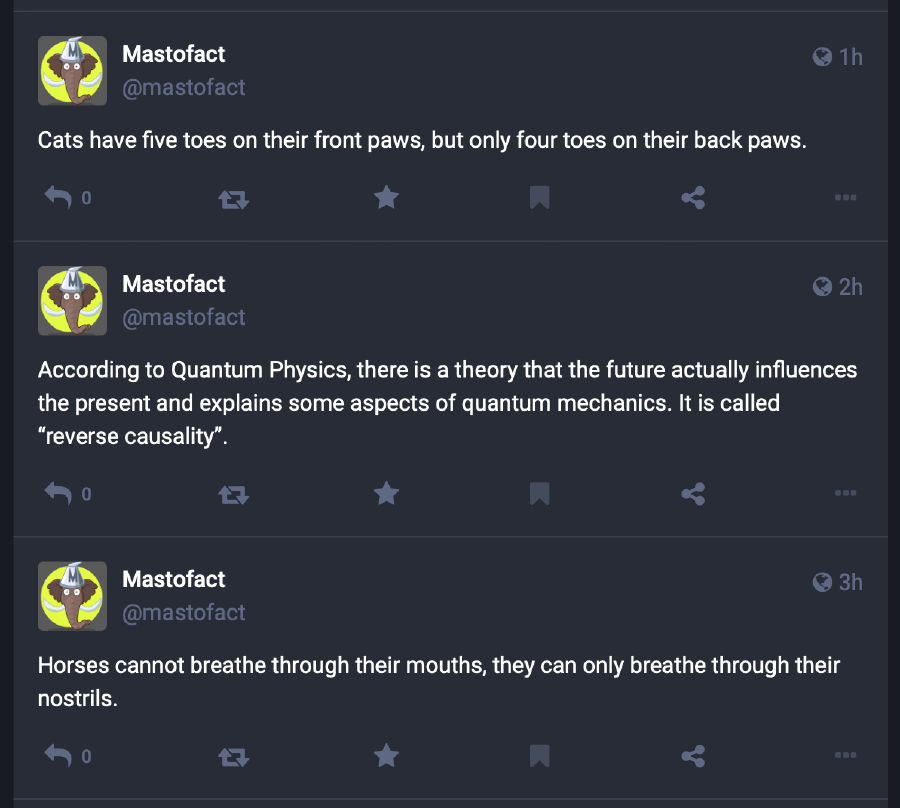

I hope you enjoyed it! You can find the full source code on GitHub. It’s currently running at @mastofact!